Toilet Blog Engine, Version 10

I've been improving this blog, despite not posting about it for 5 years, and I've been quite active lately. My last post was about version 8, but now Toilet is on version 10. I'm not sure where to start, so let me describe some of the features I've added. I've also dropped a few things, but for good reasons.

This blog used to run on Java EE, but that's old. It's now running on Jakarta EE. (It's the same thing, but different people are in charge of it now.) Since Google no longer requires AMP for their page carousel, I see no benefit to supporting it anymore, and have dropped it. I took this opportunity to start using timestamps with time zones. Since we've finally made computers good at writing made-up things, I've ripped out Spruce, my random sentence generator. It was the only part of the codebase that needed Jython, and a blog doesn't need a random sentence generator, so I've let it go.

I've improved the blog's backups. There's a meme of servers storing logs and files but never deleting them, until one day the server is full and malfunctioning. I'll admit that my blog was like that, though I have so many gigs of free space that I could go months without manually cleaning out old backups. I've fixed that. The blog now keeps daily backups for a month and monthly backups for 2 years, and deletes the rest.
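A minimal sketch of that retention rule in Java, not the blog's actual code. It assumes one backup per day, identified by its date, and it assumes the "monthly" backup is the one taken on the 1st of the month; the class and method names are made up for illustration.

```java
import java.time.LocalDate;
import java.util.ArrayList;
import java.util.List;

final class BackupRetention {
    /** True if a backup taken on backupDate should still exist on today. */
    static boolean shouldKeep(LocalDate backupDate, LocalDate today) {
        // Daily tier: keep every backup newer than one month.
        if (backupDate.isAfter(today.minusMonths(1))) {
            return true;
        }
        // Monthly tier. Assumption: the monthly backup is the one from the 1st.
        return backupDate.getDayOfMonth() == 1
                && backupDate.isAfter(today.minusYears(2));
    }

    /** Returns the backups that fall outside both tiers and can be deleted. */
    static List<LocalDate> toDelete(List<LocalDate> backups, LocalDate today) {
        List<LocalDate> doomed = new ArrayList<>();
        for (LocalDate backup : backups) {
            if (!shouldKeep(backup, today)) {
                doomed.add(backup);
            }
        }
        return doomed;
    }
}
```

Keeping the decision in a pure function of two dates makes it easy to test without touching the file system; the code that actually deletes files would only need to call `toDelete`.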

That was all version 9. I generally bump the major version number when I make breaking changes to the database. Last year, I finally had enough of mysterious messages appearing in SSH's message of the day, particularly ones nagging me to upgrade to Ubuntu Pro (because I had the balls to run Ubuntu 20.04 in 2023). So I wiped the server and installed Debian 12. That upgraded Postgres to version 15, which supports generated columns. This feature computes derived data (like some parts of the search index) automatically, without needing a materialized view, so I switched to it and released version 10. There were other improvements, too.

I noticed that the library I was using to hash passwords was not supported by its author, so I changed to one that is. This blog uses a somewhat unique security model that doesn't involve usernames. Instead, you're shown a different administration page depending on the password you enter. I decided that there were too many passwords, and started to cut down on them. While doing that, I realized that the code backing this was awful, so I refactored that into something better.
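To make the username-free model concrete, here is a rough sketch of how a password could select an administration page. It is not the blog's code and does not use the blog's hashing library (the post doesn't name it); it swaps in PBKDF2 from the JDK so the example has no dependencies. `PasswordRouter`, the page names, and the iteration count are all assumptions for illustration.

```java
import java.security.GeneralSecurityException;
import java.security.MessageDigest;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;
import javax.crypto.SecretKeyFactory;
import javax.crypto.spec.PBEKeySpec;

final class PasswordRouter {
    private static final int ITERATIONS = 50_000; // illustrative, not a recommendation
    private static final int KEY_BITS = 256;

    /** Salt and derived hash stored for one administration page. */
    private static final class Entry {
        final byte[] salt;
        final byte[] hash;

        Entry(byte[] salt, byte[] hash) {
            this.salt = salt;
            this.hash = hash;
        }
    }

    private final Map<String, Entry> entriesByPage = new LinkedHashMap<>();

    static byte[] hash(char[] password, byte[] salt) {
        try {
            PBEKeySpec spec = new PBEKeySpec(password, salt, ITERATIONS, KEY_BITS);
            return SecretKeyFactory.getInstance("PBKDF2WithHmacSHA256")
                    .generateSecret(spec).getEncoded();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("PBKDF2 is unavailable", e);
        }
    }

    /** Associates a password (stored only as salt + hash) with a page name. */
    void register(String page, char[] password, byte[] salt) {
        entriesByPage.put(page, new Entry(salt.clone(), hash(password, salt)));
    }

    /** Returns the page unlocked by this password, if any. */
    Optional<String> pageFor(char[] password) {
        String match = null;
        // Check every entry even after a match, so each lookup does the
        // same amount of hashing regardless of which password was entered.
        for (Map.Entry<String, Entry> e : entriesByPage.entrySet()) {
            byte[] candidate = hash(password, e.getValue().salt);
            if (MessageDigest.isEqual(candidate, e.getValue().hash) && match == null) {
                match = e.getKey();
            }
        }
        return Optional.ofNullable(match);
    }
}
```

One consequence of this design is that every login attempt costs one hash per registered password, which is a practical reason to keep the number of passwords small.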

While reviewing old libraries, I changed my markdown code to use CommonMark-Java. This is a native Java library that supports lots of extensions, like GitHub Flavored Markdown. Now that my blog doesn't have any server-side JavaScript, I can drop that interpreter from my Java code.

But what about speed? Let's compress stuff, so there's less data transferred! Brotli is an algorithm that's been around for about 10 years and is optimized for web traffic. I was able to find a Java library for that and use it. Zstd is a very different algorithm that's built to be very fast and multithreaded. I've been using zstd on the drive used for my server's file shares. Browsers recently started implementing it, and there's a Java library, so I did likewise.
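With several compression algorithms available, the server has to pick one per request based on the browser's `Accept-Encoding` header. The sketch below shows one simple way to do that. It is not the blog's code: the preference order (zstd, then Brotli, then gzip) is an assumption, and it deliberately ignores the `*` wildcard and only uses `q` values to detect codings the client has refused (`q=0`).

```java
import java.util.HashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;

final class EncodingNegotiator {
    // Assumed server-side preference order; the post does not state one.
    private static final List<String> PREFERRED = List.of("zstd", "br", "gzip");

    /** Returns the content coding to use, or "identity" for no compression. */
    static String choose(String acceptEncoding) {
        if (acceptEncoding == null || acceptEncoding.isBlank()) {
            return "identity";
        }
        // Parse "gzip, br;q=0.8, zstd" into coding -> weight.
        Map<String, Double> offered = new HashMap<>();
        for (String part : acceptEncoding.split(",")) {
            String[] pieces = part.trim().split(";");
            String name = pieces[0].trim().toLowerCase(Locale.ROOT);
            double q = 1.0;
            for (int i = 1; i < pieces.length; i++) {
                String param = pieces[i].trim().toLowerCase(Locale.ROOT);
                if (param.startsWith("q=")) {
                    try {
                        q = Double.parseDouble(param.substring(2));
                    } catch (NumberFormatException e) {
                        q = 0.0; // treat a malformed weight as "not acceptable"
                    }
                }
            }
            if (!name.isEmpty()) {
                offered.put(name, q);
            }
        }
        // Pick the first server-preferred coding the client accepts.
        for (String coding : PREFERRED) {
            if (offered.getOrDefault(coding, 0.0) > 0.0) {
                return coding;
            }
        }
        return "identity";
    }
}
```

The chosen value is what goes in the `Content-Encoding` response header. Since the response now varies by request header, `Vary: Accept-Encoding` should be sent as well so caches keep the variants apart.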

But what about speed? Google PageSpeed was mocking me, telling me that my site could be better. It complained about image sizes. My base image size is around a quarter of 1080p: 960×540 to 960×600. I decided to introduce images that were a quarter of that: 480×270 to 480×300. Now Google loves my images. Because posts in the 'Since you've made it this far' area at the bottom of blog posts use the smallest available images, this lightened article pages, too. I've also implemented a lightbox feature, so when you click on an image (that's not a link), it expands and shows the largest image available.

But what about speed? A few weeks ago, I watched a video examining why this particular site is fast. One strategy used is loading a page in the background when the visitor mouses over a link, then swapping the page with that when clicked. I thought that was a brilliant idea, and did likewise. After doing that, I realized that if my site is preloading pages, it can search that pre-loaded page for better images and swap those in, and Google won't care, so I did that, too. Since I have a boatload of caching, I figure that it's mostly harmless to have someone hammer my server by jiggling their mouse all over a page. (Though if a preload takes more than 1 second, it's likely that the visitor's connection is bad, or my server is overloaded, so it stops preloading.)

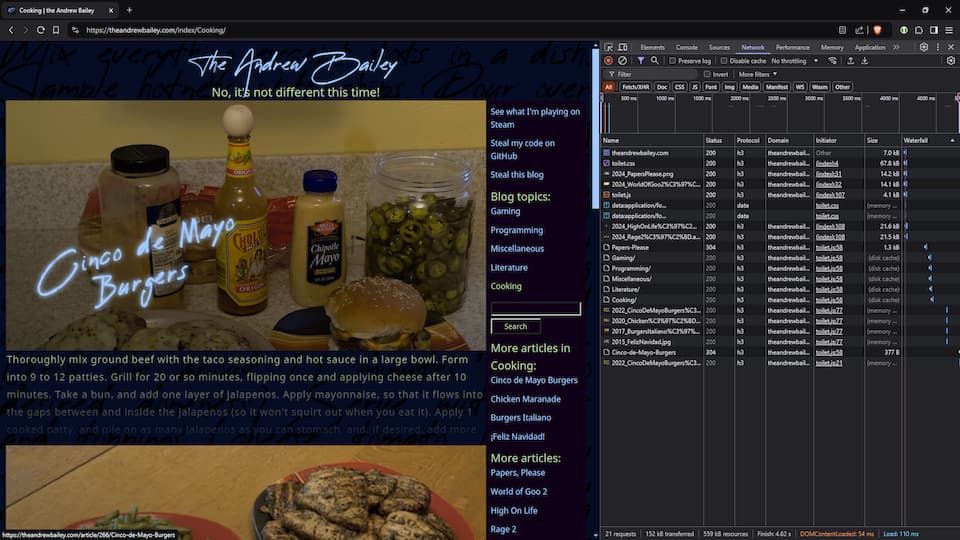

At some point, I came across the Server-Timing header. It's a way for the server to tell the browser how long it took for the server to create the page. I'm measuring how long it takes for queries to run, for back end processing, front end processing, and a few other things. I might have gone a bit crazy with these.
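For reference, a `Server-Timing` value is a comma-separated list of metrics, each with an optional `dur` in milliseconds, for example `db;dur=13.0, render;dur=4.0`. Here's a small sketch of a collector that builds such a value. The class and the metric names are hypothetical, not taken from the blog's source.

```java
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;
import java.util.StringJoiner;

final class ServerTimings {
    // Insertion-ordered so the header lists metrics in the order they ran.
    private final Map<String, Double> millisByMetric = new LinkedHashMap<>();

    /** Adds elapsed nanoseconds to a metric, summing repeated measurements. */
    void record(String metric, long nanos) {
        millisByMetric.merge(metric, nanos / 1_000_000.0, Double::sum);
    }

    /** Formats the collected metrics as a Server-Timing header value. */
    String toHeaderValue() {
        StringJoiner joiner = new StringJoiner(", ");
        for (Map.Entry<String, Double> e : millisByMetric.entrySet()) {
            // Locale.ROOT guarantees a '.' decimal separator.
            joiner.add(String.format(Locale.ROOT, "%s;dur=%.1f", e.getKey(), e.getValue()));
        }
        return joiner.toString();
    }
}
```

In a servlet, the result would be sent with something like `response.setHeader("Server-Timing", timings.toHeaderValue())`, after which the numbers show up in the browser's network tools.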

A friend of mine asked if I posted the source code for my blog somewhere. I gave him the link, then I panicked at the thought of him asking me how to set it up. That inspired me to write a deploy script, and a readme file on GitHub to explain the remaining manual steps. When I reinstall Debian (or whatever), I won't fear setting up this blog again!

Someday, I'll implement the ability to run multiple blogs from the same server. My idea is to have multiple databases, each one with a different blog's data, the connections registered with the server, and requests routed by (sub-)domain name. However, I can't figure out how to set up those databases automatically, the way the blog already does for the primary one. I guess it will remain a pipe dream for now.
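The routing half of that idea is the easy part, and could look something like the sketch below: normalize the request's `Host` header and look up which registered connection to use. This is purely hypothetical, since the feature doesn't exist; the class name, host names, and JNDI-style data source names are invented, and it does nothing about the hard part (creating the databases).

```java
import java.util.Locale;
import java.util.Map;

final class BlogRouter {
    private final Map<String, String> dataSourceByHost;
    private final String defaultDataSource;

    BlogRouter(Map<String, String> dataSourceByHost, String defaultDataSource) {
        this.dataSourceByHost = Map.copyOf(dataSourceByHost);
        this.defaultDataSource = defaultDataSource;
    }

    /** Maps a Host header value to the name of the data source to use. */
    String dataSourceFor(String hostHeader) {
        if (hostHeader == null) {
            return defaultDataSource;
        }
        // Host names are case-insensitive.
        String host = hostHeader.trim().toLowerCase(Locale.ROOT);
        // Strip a trailing ":port", but leave a bare IPv6 literal like "[::1]" alone.
        int colon = host.lastIndexOf(':');
        if (colon >= 0 && !host.endsWith("]")) {
            host = host.substring(0, colon);
        }
        // Unknown hosts fall back to the primary blog.
        return dataSourceByHost.getOrDefault(host, defaultDataSource);
    }
}
```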

Outside of the code, I used to run Payara directly on the internet, but now all of my web server traffic runs through HAProxy. This handles TLS termination, does some caching, and forwards to other servers based on domain names. For example, I've set up nginx to serve my files over WebDAV, so I can access my files with a browser. (I've experimented with IRC and video chat servers, but they aren't as useful as I hoped.) My previous setup (running Payara directly) wouldn't easily do that. In addition, HAProxy supports better TLS than Payara, and I'm not held back by Java's TLS support anymore. I've been running TLS 1.3 for a long time, and recently, HTTP/3! While I understand how HTTP/3 works, I'm not convinced that it's a big improvement 99+% of the time, but having it doesn't hurt anything.

AVIF is fully supported on current versions of Chrome, Safari, and Firefox. Since it has better per-byte efficiency than JPEG, I don't do 1080p JPEGs anymore, but I'll keep base resolution JPEGs for backwards compatibility. To reduce the pain of having to resize and encode different versions of every image in my posts, I wrote a script, avifify.sh, that automates what I was doing manually. While I'm gung-ho on AVIF, I'm aware of JPEG XL. I've even implemented it (and WebP) in that script. Chrome once had an experimental implementation of JPEG XL, but dropped it, drawing disapproval from many. After playing around with that script for hours, I found that small (<1 bit per pixel) AVIF images can look acceptable, but JPEG XL images of the same file size do not. Unless JPEG XL dramatically improves there, I concede that Chrome's call is the right one.
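For anyone unfamiliar with the "bits per pixel" figure: it's the file size in bits divided by the number of pixels. A tiny helper makes the arithmetic explicit (the class name and the file sizes in the example are made up, not measurements from this blog).

```java
final class BitsPerPixel {
    /** File size in bits divided by pixel count. */
    static double of(long fileSizeBytes, int width, int height) {
        // Widen to long before multiplying so large images can't overflow int.
        return fileSizeBytes * 8.0 / ((long) width * height);
    }
}
```

For example, a 960×540 image has 518,400 pixels, so a 51,840-byte file works out to 0.8 bits per pixel, and anything under 64,800 bytes at that size is below 1 bit per pixel.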

That's all I got for now. I wonder what other feature will come along that I'll be dying to implement.